There has been much discussion in the academy about AI writing programs like ChatGPT. The past few months have seen a flurry of activity: college administrators calling emergency meetings, professors changing their assignments, and educators writing essays (some perhaps written by AI?) whose reactions range from the nonchalant to the apocalyptic about the fate of college writing, the future of the humanities, and the outlook for higher education.

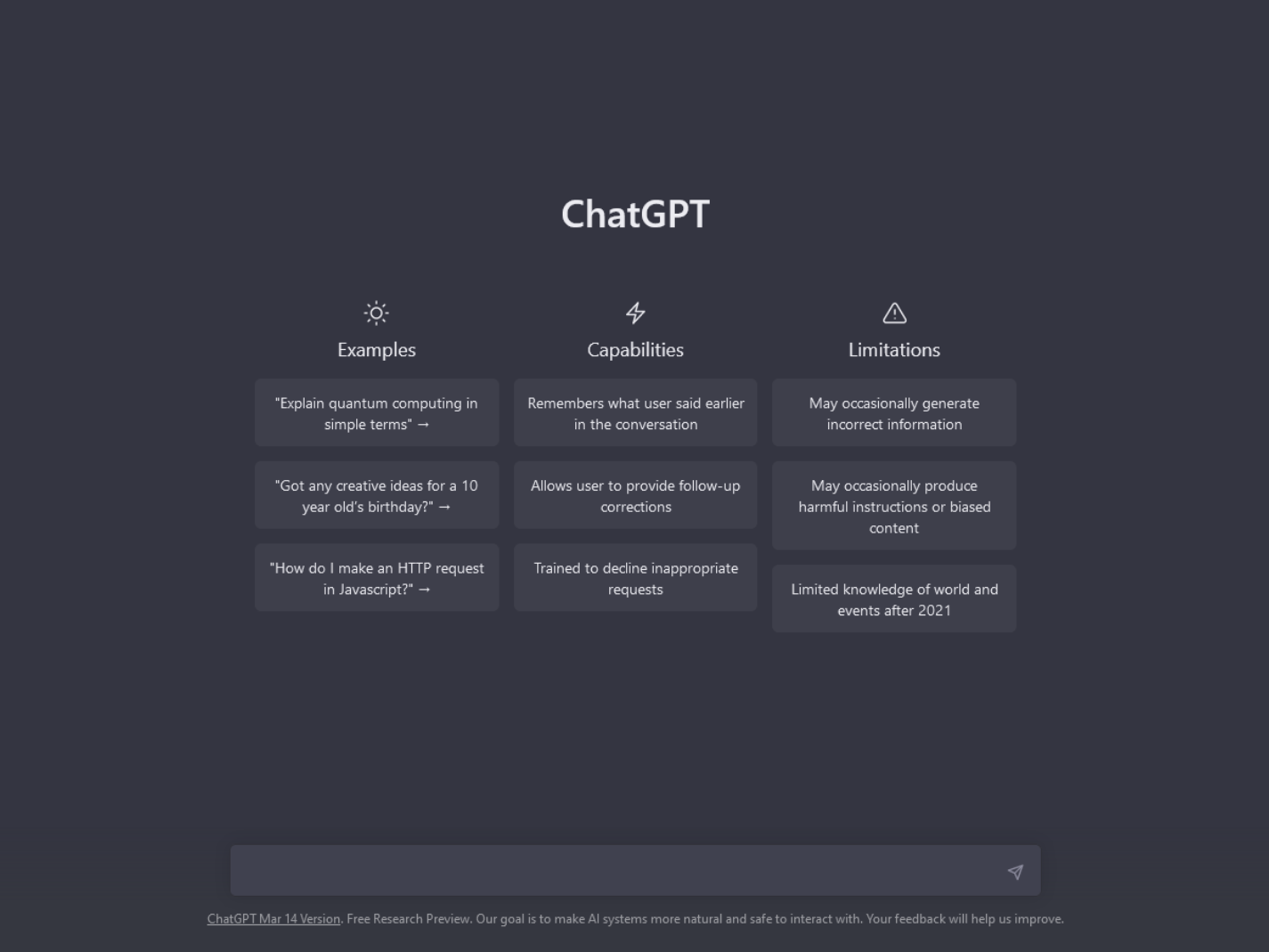

For those not familiar with them, AI programs like ChatGPT are chatbots (computer programs designed to simulate conversation with humans) that predict which words and phrases should come next. Like other AI systems, they improve as they are trained on more data, drawn from human interaction and from texts such as articles, books, and websites. The GPT-3 model, for example, was “trained” on a text set that included 8 million documents and over 10 billion words.

The conversation about ChatGPT so far has focused mainly on its effects on the humanities. However, I think that humanities professors will be better off than their faculty peers once AI is fully adopted by the university. The problem won’t be mass unemployment among English, history, philosophy, classics, or theology professors; rather, it will be mass unemployment among STEM (science, technology, engineering, and mathematics) and pre-professional faculty.


That is because the subjects taught by STEM and pre-professional faculty appear the most likely to be taken over by AI in the future. These subjects require numerical critical thinking to make assessments about populations, something AI already does as well as, if not better than, humans. For example, some AI programs have shown better diagnostic accuracy than human doctors, and last year an AI’s stock picks generated a higher price return than the S&P 500. Some commentators are already discussing whether AI will replace engineers, nurses, and accountants in the near future.

Even more depressing for STEM and pre-professional faculty is the rise of alternative credentialing programs. Businesses like Google, Bank of America, GM, IBM, and Tesla have removed the college degree requirement for many positions in their companies. As AI improves its numerical and linguistic critical thinking skills, companies are likely to incorporate it into their pre-screening and training of employees. There is also great potential for growth in alternative credentialing agencies, which can certify students in specific skills, and much of this will likely be available free online. All these trends challenge the university’s primary status as a credentialer that signals to employers who can think and write.

This in turn raises the question of why parents should shell out tens of thousands of dollars every year to send their children to college when those children can learn for free online, get credentialed elsewhere more cheaply and quickly, or be trained by their employer. For the elite universities (the Harvards, the Yales, the Stanfords), this is not likely to be an issue, because the opportunity to network with children of the elite will outweigh any financial cost or lack of learning. But for mid- and low-tier institutions, such as public regional comprehensive universities, AI poses an existential threat, especially if their funding model depends on STEM and pre-professional students.


If the news about AI is bad for schools that rely on their STEM and pre-professional programs, it could be good for universities that have a clearly defined mission and identity rooted in liberal education. If liberal education means studying something for its own sake so that we can reflect on who we are and what our purpose in life is, then it is best accomplished by studying the humanities. By reading and discussing literature, history, philosophy, and other traditions of the humanities, students learn the inherent value of liberal education: to be free from the demands of necessity and the calls for utility, and so to connect with what authentically makes one a human being.

With AI, the point of a university education might shift. It would no longer be about acquiring economic or critical skills, but about becoming a free and reflective human being. One would enroll in college because it is understood as an intrinsic good for human flourishing. If you want a job, go learn AI on the Internet.

Since the turn of the century, concerns about the place and relevance of liberal education in the American university have continued unabated. ChatGPT appears to put another nail in the coffin of liberal education; however, a closer look suggests it could be the key to liberal education’s resurrection. With employment demands, assessment requirements, and skill training gone, what is left for the university to do in the age of AI? To study things for their own sake—and only liberal education can provide that.

Editor’s Note: This article was originally published by RealClearWire on March 29, 2023 and is republished here with permission.

Image: I don’t know a good name., Wikimedia Commons, Creative Commons CC0 1.0 Universal Public Domain Dedication

I admit I got a good laugh out of this article. The author failed miserably to make the case that STEM jobs, in particular engineering jobs, are at risk from AI. I even took the liberty of reading some of the referenced articles, but they’re written by people (corporate executives and academics) who don’t know much at all about the day-to-day engineering work performed in industry. Apparently these people believe engineering largely entails sitting in front of a computer terminal all day writing software. Actually, other than software engineers, very few engineers spend a lot of time programming. And engineers are not data scientists who spend their days analyzing large databases.

Yes, computers can assist in engineering tasks, but using a computer to do engineering work does not mean AI is involved. One of the references cites a Professor Girolami, who makes the foolish argument that, well, 40 years ago civil engineers did drafting and now that’s all automated. So what? Automation does not mean AI is involved. CAD (computer-aided design) tools are used to improve productivity and create databases to assist manufacturing. They cannot come up with original designs. You don’t just feed in the specifications and have the CAD tools spit out your design.

I read a couple of the nursing articles the author referred to. They state that AI will improve nursing productivity and little else. Indeed, the article “Rise of the Robots: Is Artificial Intelligence a Friend or Foe to Nursing Practice?” (D. Watson, J. Womack, and S. Papadakos, Crit. Care Nurs. Q. 43(3), 2020) did not say AI jeopardizes nursing jobs. In fact, it makes just the opposite claim, stating that the impact of AI on the nursing field is largely unknown, unexplored, and difficult to predict.

Another corporate type, in an article referenced by the author, talked about deep learning and how it will negatively impact engineering employment down the road. Well, deep learning is not about to replace engineers, because it can’t do engineering design. Some of the current applications for deep learning are fraud detection, natural language processing, computer vision, and investment modeling. Impressive, yes, but there’s a small problem: none of those tasks are engineering tasks. Engineers designed those deep learning systems; they were not designed by other AI systems. No self-eating watermelons here.

Many of the potentially depressing situations for future STEM workers that the author talks about have almost nothing to do with STEM. Very few STEM employees are involved in pre-screening and training other employees. Even fewer STEM employees are involved in credentialing activities.

The author ends with “If the news about AI is bad for schools that rely on their STEM and pre-professional programs, it could be good for those universities to have a clearly defined mission…” I think the antecedent in that clause is quite weak. The case was not made.

A wonderfully written, thought-provoking essay. Thank you.

As someone in the real world of STEM education, I have to laugh and laugh and laugh. And laugh.