Under the guise of student-centered priorities, institutions of higher education have relied on student evaluations of teaching (SETs) as a core component of faculty assessment. These evaluations are typically presented as a means of capturing student feedback to improve instruction and measure teaching effectiveness. However, a growing body of empirical research has revealed that SETs are deeply flawed instruments. Not only are they unreliable indicators of instructional quality, but they are also vehicles for bias. Furthermore, given that students lack discipline-specific expertise or pedagogical training, it is illogical to presume that they are positioned to provide useful feedback on how instruction should take place.

Perhaps the most pressing concern about SETs is their lack of validity. Numerous studies have demonstrated that student ratings do not correlate meaningfully with actual student learning. Instructors who receive high ratings are not necessarily the ones whose students perform better or retain material more effectively. Rather, ratings are often influenced by factors unrelated to pedagogy, such as the instructor's attractiveness, personality, or grading leniency. In fact, evidence suggests that instructors who inflate grades may receive higher evaluations, creating a perverse incentive structure that undermines academic rigor.

The reliability of SETs is also in question. Ratings can vary significantly across courses, semesters, and even among students within the same class, telling us more about the students than about the instructors they are asked to evaluate. The inherently subjective nature of these evaluations introduces considerable noise into the data, making it difficult to draw any meaningful conclusions about teaching effectiveness.

Beyond issues of psychometric inadequacy, SETs are demonstrably biased. Meta-analyses and institutional case studies consistently reveal that female instructors and instructors of color are rated more harshly, even when course materials and learning outcomes are identical. Because SETs feed into hiring, promotion, and tenure decisions, these biases can shape careers.

The use of SETs also reflects and reinforces a problematic shift in the culture of higher education: the treatment of students as consumers. When student satisfaction becomes the metric by which teaching is judged, the incentive for educators is to entertain rather than educate. Difficult coursework, rigorous grading, and high expectations, things which should be the hallmarks of genuine learning, are penalized in evaluations that privilege likability and immediate gratification.


This consumer-driven model undermines the traditional academic values of intellectual challenge, critical thinking, and delayed reward. It reduces the complex, developmental process of education to a popularity contest, in which faculty must compete for approval rather than mentor students toward mastery. In doing so, it compromises the integrity of the academic mission and fosters a transactional, rather than transformative, educational experience.

Students also lack the discipline-specific knowledge and pedagogical training needed to determine what course content, structure, or teaching techniques should be employed. Given that research demonstrates that student preferences and perceptions are not always aligned with what actually facilitates their learning, educators would be wise to pause before relying on satisfaction surveys to make course adjustments.

Finally, feedback surveys are typically distributed anonymously. When open-ended questions are present, this gives students license to share disrespectful or inappropriate comments in an accountability-free setting. Schools should consider holding students accountable for such comments, and they can easily do so while still preserving anonymity in what gets shared with instructors. Emphasizing personal responsibility for improper or abusive responses on evaluations would help to curb the entitlement that students feel when doling out their immature critiques.

Student evaluations of teaching are not effective or insightful measures of faculty performance, yet despite a plethora of empirical evidence documenting their myriad flaws, they continue to be used in higher education. Just as a surgeon should not be required to incorporate feedback about how to make an incision from their uninformed patient, decisions about educational practice should not be made based on satisfaction surveys from challenge-averse, entitled, consumerist students.

At a time when higher education must take stock of its many failures, it would be wise to correct one of them and eliminate this pointless and harmful practice.

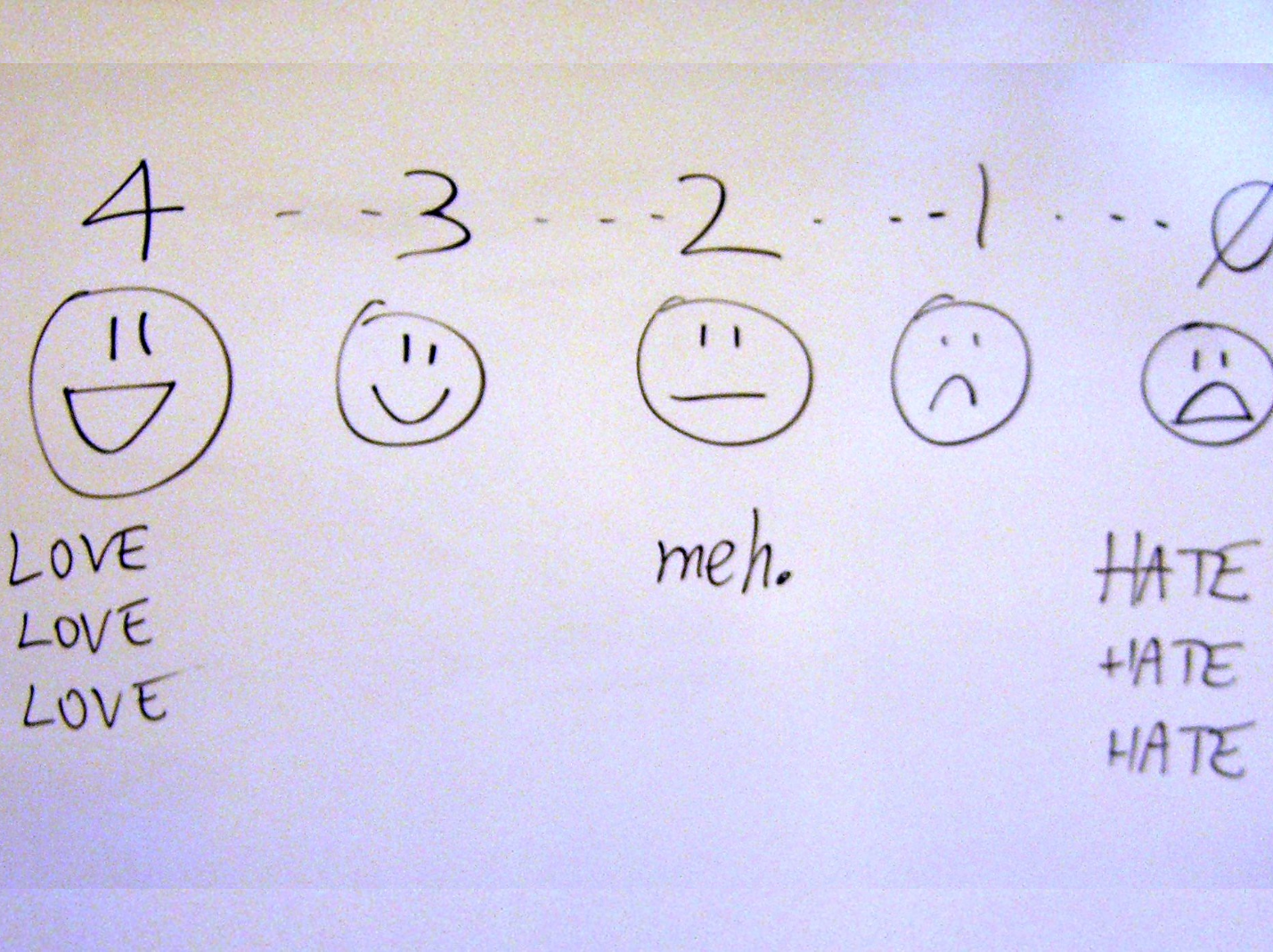

Image: “Evaluation scale” by billsoPHOTO on Flickr

And replace it with what?

First, the university should be student-centered: students are the ones paying the bill, and/or having the bill paid for them.

Second, it is clear that the faculty won’t police itself — the fact that a Claudine Gay makes it to the top of Harvard shows that.

And third, if students are evaluated, why shouldn’t faculty be?

So this system is badly flawed, but what would you replace it with?

They should be replaced (or augmented) by 360-degree evaluations that also include peers, deans, and others who do know how to evaluate teaching, and that take into account the syllabus, exams, project work, etc. done in the class. This is how it's been done in industry for the past 40 years, and it is considered a "best practice" for a true evaluation of overall performance. I continue to be surprised (and even a bit shocked) that academia hasn't evolved to it yet, instead continuing the lazy and inaccurate practice of just taking the student evaluation scores. It's well past time to professionalize the teaching role in terms of evaluating job performance, especially as it relates to salary treatment.