Peter Wood is president of the National Association of Scholars and author of “1620: A Critical Response to the 1619 Project.”

The nation’s 250th anniversary is only 29 months away. The National Association of Scholars is commemorating the events that led up to the Second Continental Congress officially adopting the Declaration of Independence on July 4, 1776. This is the sixth installment of the series. Find the fifth installment here. “His Majesty trusts that no opposition […]

The nation’s 250th anniversary is only 29 months away. The National Association of Scholars is commemorating the events that led up to the Second Continental Congress officially adopting the Declaration of Independence on July 4, 1776. This is the fourth installment of the series. Find the third installment here. Joe Biden — Photo by Gage […]

Editor’s Note: This article was originally published by Blaze Media on March 7, 2024 and is crossposted here with permission. The Sunshine State is now the test case of whether anti-DEI laws can have a meaningful effect in turning back these neo-racist programs. The University of Florida boldly advanced to the front of the academic line last […]

The nation’s 250th anniversary is only 29 months away. The National Association of Scholars is commemorating the events that led up to the Second Continental Congress officially adopting the Declaration of Independence on July 4, 1776. This is the second installment of the series. Find the first installment here. In December, we celebrated the anniversary […]

The nation’s 250th anniversary is only 29 months away. The National Association of Scholars is commemorating the events that led up to the Second Continental Congress officially adopting the Declaration of Independence on July 4, 1776. This is the second installment of the series. Find the first installment here. Last month, we celebrated the anniversary […]

Editor’s Note: This article was originally published by National Association of Scholars on January 24, 2024 and is crossposted here with permission. In the aftermath of Claudine Gay’s defenestration as president of Harvard, many conservatives, libertarians, and un-woke liberals see an opportunity to rally public support for an operation to rescue higher education. The idea has caught […]

Editor’s Note: This article was originally published by The American Conservative on January 14, 2024 and is crossposted here with permission. One might think the world had tilted an additional 40 degrees on its axis on January 2. Judging from news accounts, the northern hemisphere was plunged into darkness, and an even more bone-chilling cold than […]

Editor’s Note: This article was originally published by The Spectator World on January 3, 2024 and is crossposted here with permission. In the end Barack Obama, Penny Pritzker, 700-some members of the faculty, the mighty voice of the Harvard Crimson and the entire nomenclature of the DEI movement could not save her from herself. Claudine Gay resigned as […]

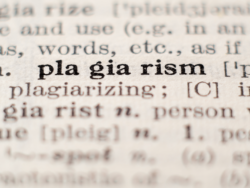

Editor’s Note: This article was originally published by The Spectator World on December 24, 2023 and is crossposted here with permission. Harvard president Claudine Gay’s troubling history of appropriating other people’s ideas and words and passing them off as her own has a well-worn name: plagiarism. Every college and university in the United States prohibits plagiarism. Most present students with […]

Author’s Note: The nation’s 250th anniversary is only 30 months away. The National Association of Scholars can hardly wait. Over the interval, we will post short commemorations of the events that led up to the Second Continental Congress officially adopting the Declaration of Independence on July 4, 1776. Some events are familiar to most Americans, […]

Editor’s Note: This article was originally published by The American Spectator on December 14, 2023 and is crossposted here with permission. Academic dishonesty strikes many people as boring. After all, it is academic. It is not like Sam Bankman-Fried, the “crypto king,” making $8 billion disappear into thin air. It is not like Florida dentist Charlie Adelson […]

How much do the diversity, equity, and inclusion (DEI) movement and anti-Semitism feed on one another? There was a time when DEI advocates thought it was part of their remit to fight anti-Semitism too. In fall 2017, the University of Washington’s Department of Epidemiology issued a glossary of DEI terms that along with “ableism,” “birth assigned sex,” and […]

The Pilgrims’ treaty with the Wampanoag lasted fifty years. This would not have happened had the Wampanoag felt imposed on or exploited. Indeed, at that first Thanksgiving, a three-day feast, the Wampanoag numbered about ninety, while only fifty of the settlers were still alive. Had the Wampanoag decided to end the peaceable encounter, things would not have […]

The law school deans at places such as Yale, Harvard, Stanford, and Penn rarely turn to me for advice. Ok, never. That’s partly because I am not a lawyer but mostly because I am the head of the National Association of Scholars (NAS), an old organization that is known as one of the conservative voices […]

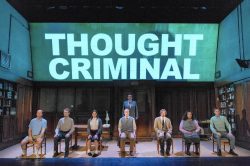

Once upon a time, liberals and conservatives could converse easily. I know that sounds implausible, but it is true. Now, I am fairly old. Fred Flintstone was just two grades ahead of me at Bedrock High. Back then we could debate questions such as whether it was a good idea to let dinosaurs turn into […]

American higher education is in crisis, but persuading students to avoid it won’t fix the underlying problems. Charlie Kirk’s new book, The College Scam: How America’s Universities Are Bankrupting and Brainwashing Away the Future of America’s Youth (Winning Team Publishing, 2022), is based on the faulty premise that America can survive without higher education. No […]

John Leo passed away on May 9. This website, Minding the Campus, was founded by John Leo, I believe around 2007. He had recently taken a position at the Manhattan Institute, and he and I met around that time. He asked me to write for MTC, and I responded with an article that he posted […]

The president of Harvard University, Larry Bacow, has joined numerous other college presidents in a rush to declare how upset he is over the killing of George Floyd and lamenting how divided the country has become. Brian W. Casey, president of Colgate University, wrote to alumni to express his “horror of watching the killing of […]

The Pulitzer Committee has awarded Nikole Hannah-Jones a prize for her lead essay in The New York Times’ “The 1619 Project.” The news doesn’t exactly come as a surprise. It was widely rumored that Hannah-Jones was under consideration, which raised the tantalizing question of how the Pulitzer Committee might find its way the past […]

The humanities are troubled – and that means the way of looking at the world is also distressed. Broadly conceived, the humanities are a filter to one’s view of the world, a way that emphasizes and celebrates what it means to be human. As a collection of academic departments that cover history, English, foreign languages, […]

Here we go again—yet another book denouncing merit and meritocracy. Merit is such a useful idea that it is hard to think that a society could do without it, and probably none does. That, however, hasn’t restrained a burgeoning industry of people who are fed up with the whole idea. “Abolish merit!” they thunder. “It […]

The official greeting of Harvard president Larry Bacow to the members of the Harvard Community — a typical welcome to new students, faculty, and parents — has touched a political nerve. Stina Chang, writing on the Asian-American news site AsAmNews, picked up Bacow’s pitch to legislators to ease restrictions on international students who want to […]

Many more college students have read Ta-Nehisi Coates’ anti-white screed Between the World and Me (2015) than have read, say, works by the Nobel economist Robert Fogel, Time on the Cross: The Economics of American Slavery (1974) or Without Consent or Contract: The Rise and Fall of American Slavery (1989). I can say that with […]

The academic left’s effort to suppress opposing views is fierce, agile, and determined. It can summon an angry mob at a moment’s notice, get the undivided attention of a busy college president, or turn on the tears over the anguish a student feels when oppressed. Whether the goal is to bar a speaker, deface a […]

The state of Alaska has unleashed a grizzly bear of a problem for the lower forty-eight. By slashing public spending on the University of Alaska by 41 percent, the governor and the legislature have defied one of the settled rules of American politics: Thou shalt not threaten public higher education. What if other states follow […]

Are Oberlin College officials serious when they say they were defending students’ free speech? That remains the college’s defense even after a jury found the college guilty of libel and interference with business in its dealings with Gibson’s Food Mart and Bakery. Gibson’s Bakery felt defamed by Oberlin College’s involvement in a campaign accusing the […]

The College Board, ever alert to cultural signals, has decided the SATs can be improved by adopting what might be called McNeil methods. In the 1930s, Charles K. McNeil, a math teacher at Riverdale Country School in New York, indulged a not very respectable hobby of gambling on the side. Growing bored with picking winners […]

Last week Google told the Claremont Institute that the Institute’s advertisements for its annual conference were banned. This act of censorship by the internet giant followed Facebook’s announcement that it was banning Milo Yiannopoulos, Alex Jones, Louis Farrakhan, and Paul Watson. Ryan P. Williams, the president of the Claremont Institute, posted his account of what […]

In higher education, disgraceful scandals and major embarrassments once arrived one by one. Now, they appear in clusters, like epidemics we should have been expecting. Take the University of Tulsa, suddenly in the grip of a “strategic plan” aimed at marketing the university to students who are career-oriented and lured by the idea of fighting […]

Should conservatives establish a new university of, by, and for conservatives? The idea has been relaunched about as many times as the Starship Enterprise. I first heard it in the 1990s, but doubtless, it is older. Most recently Frederick Hess and Brendan Bell at the American Enterprise Institute cast the vision in “An Ivory Tower […]
